Tags

3.2.2, Azure, Binary object, BLOB, configuration, Fluent Bit, use cases

When I first heard about Fluent Bit introducing the support binary large objects (BLOBs) in release 3.2. I was a bit surprised; often, handling such data structures is typical, and some might see it as an anti-pattern. Certainly, trying to pass such large objects through the buffers could very quickly blow up unless buffers are suitably sized.

But rather than rush to judgment, the use cases for handling blobs became clear after a little thought. First of all, there are some genuine use cases. The scenarios I’d look to blobs to help are for:

- Microsoft applications can create dump files (.dmp). This is the bundling of not just the stack traces but the state, which can include a memory dump and contextual data. The file is binary in nature, and guess what? It can be rather large.

- While logs, traces, and metrics can tell us a lot about why a component or application failed, sometimes we have to see the payload that is being processed – is there something in the data we never anticipated? There are several different payloads that we are handling increasingly even with remote and distributed devices, namely images and audio. While we can compress these kinds of payloads, sometimes that isn’t possible as we lose fidelity through compression, and the act of compression can remove the very artifact we need.

Real-world use cases

This later scenario I’d encountered previously. We worked with a system designed to send small images as part of product data through a messaging system, so the data was disturbed by too many endpoints. A scenario we encountered was the master data authoring system, which didn’t have any restrictions on image size. As a result, when setting up some new products in the supply chain system, a new user uploaded the ultra-high-resolution marketing images before they’d been prepared for general use. As you can imagine, these are multi-gigabyte images, not the 10s or 100s of kilobytes expected. The messaging’s allocated storage structures couldn’t cope with the payload.

We had to remotely access the failure points at the time to see what was happening and realize the issue. While the environment was distributed, it wasn’t as distributed as systems can be today, so remote access wasn’t so problematic. But in a more distributed use case, or where the data could have been submitted to the enterprise more widely, we’d probably have had more problems. Here is a case where being able to move a blob would have helped.

A similar use case was identified in the recent Release Webinar presented by Eduardo Silva Pereira, and a use case with these characteristics was explained. With modern cars, particularly self-driving vehicles, being able to transfer imagery back in the event navigation software experiences a problem is essential.

Avoid blowing up buffers.

To move the Blob without blowing up the buffering, the input plugin tells the blob-consuming output plugin about the blob rather than trying to shunt the GBs through the buffer. The output plugin (e.g., Azure Blob) takes the signal and then copies the file piece by piece. By consuming their blob in parts, we reduce the possible impacts of network disruption (ever tried to FTP a very large file over a network for the connection to briefly drop, as a result needing to from scratch?). The sender and receiver use a database table to track the communication and progress of the pieces and reassemble the blob. Unlike other plugins, there is a reverse flow from the output plugin back to the blob plugin to enable the process to be monitored. Once complete, the input plugin can execute post-transfer activities.

This does mean that the output plugin must have a network ‘line of sight’ to the blob when this is handled within a single Fluent Bit node – but it is something to consider if you want to operate in a more distributed model.

A word to the wise

Binary objects are known to be a means by which malicious code can easily be transported within an organization. This means that while observability tooling can benefit from being able to centralize problematic data for us to examine further, we could unwittingly help a malicious actor.

We can protect ourselves in several ways. Firstly, we must first understand and ensure the source location for the blob can only contain content that we know and understand. Secondly, wherever the blob is put, make sure it is ring-fenced and that the content is subject to processes such as malware detection.

Limitations

As the blob is handled with a new payload type, the details transmitted aren’t going to be accessible to any other plugins, but given how the mechanism works, trying to do such things wouldn’t be very desirable.

Input plugin configuration

At the time of writing, the plugin configuration details haven’t been published, but with the combination of the CLI and looking at the code, we do know the input plugin has these parameters:

| Attribute Name | Description |

|---|---|

| path | Location to watch for blob files – just like the path for the tail plugin |

| exclude_pattern | We can define patterns that exclude files other than our blob files. The pattern logic, is the same as all other Fluent Bit patterns. |

| database_file | These are the same options as upload_success_action but are applied if the upload fails. |

| scan_refresh_interval | These are the same options as upload_success_action but are applied if the upload fails. |

| upload_success_action | This is a value that tells the plugin what to do, when successful. The options are: 0. Do nothing – the default action if no option is provided. delete (1). Delete the blob file add_suffix (2). Emit a Fluent Bit log record emit_log (3). Add suffix to the file – as defined by upload_success_suffix |

| upload_success_suffix | If the upload success_action is set to use a suffix, then the value provided here will be used as the suffix. |

| upload_success_message | This text will be incorporated into the Fluent Bit logs |

| upload_failure_action | These are the same options as upload_success_action but applied if the upload fails. |

| upload_failure_suffix | This is the failure version of upload_success_suffix |

| upload_failure_message | This is the failure version of upload_success_message |

Output Options

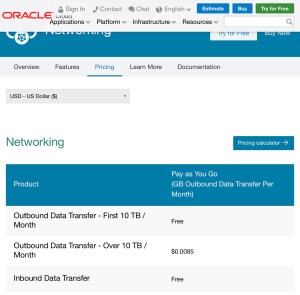

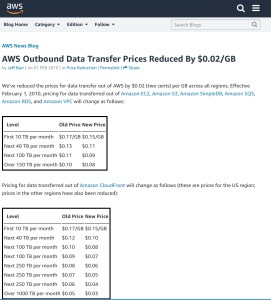

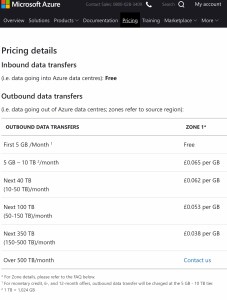

Currently, the only blob output option is for the Azure Blob output plugin that works with the Azure Blob service, but support through using the Amazon S3 standard is being worked on. Once this is available, the feature will be widely available as the S3 standard is widely supported, including all the hyperscalers.

Note

The configuration information has been figured out by looking at the code. We’ll return to this subject when the S3 endpoint is provided and use something like Minio to create a local S3 storage capability.

That consistency and predictability are important not just for code if you look at any

That consistency and predictability are important not just for code if you look at any

The problems start because RAC has some platform requirements (disk sharing either virtual or physical) that can’t be offered by all cloud (IaaS) that can be typically established with on premise hardware such as a SAN. Microsoft Azure has one of these very issues meaning it presently can’t run RAC (see

The problems start because RAC has some platform requirements (disk sharing either virtual or physical) that can’t be offered by all cloud (IaaS) that can be typically established with on premise hardware such as a SAN. Microsoft Azure has one of these very issues meaning it presently can’t run RAC (see  The second consideration that tends to get overlooked is data centre level DR. It is very easy to forget regardless how good the data centre is with precautions and redundancy there are some events that can bring a centre down. Even the most sophisticated monitoring and live VM movement can’t avoid the data centre level problems. There are well published illustrations of such issues, the best known are those Amazon have had (probably because it has hit some many customers – Amazon’s own analysis of one event

The second consideration that tends to get overlooked is data centre level DR. It is very easy to forget regardless how good the data centre is with precautions and redundancy there are some events that can bring a centre down. Even the most sophisticated monitoring and live VM movement can’t avoid the data centre level problems. There are well published illustrations of such issues, the best known are those Amazon have had (probably because it has hit some many customers – Amazon’s own analysis of one event

You must be logged in to post a comment.