Tags

architecture, GDPR, ISO, law, PCI

When we started our IT careers (depending on how long ago that was), the idea that software and legislation were connected seemed pretty remote; the only rules you might have to contend with were your local development standards. For an architect today, that is far from the case. As the saying goes, you need to be a ‘Jack of all Trades’. You don’t need to be a lawyer, but you do need a grasp of the legislation and agreements that can impact your solution, and to recognise when it is time to talk to the legal eagles.

I thought it worthwhile calling out the different things we need to have a handle on, based on my experience. There will always be domain-specific laws, but the following are largely universal.

- Software licenses – Today, we rarely build a solution without using a library, package, utility, or even a full application that we haven’t written ourselves.

  - What we can and can’t do with that third-party asset, or reasonably expect from it given the terms under which it is provided, is dictated by a license, explicit or implicit. Consider the implications of an Apache license compared to a Creative Commons Share-Alike license. In terms of negative impact, open source licenses can at worst…

    - Prevent code from being used commercially or to provide commercial services (several software vendors, such as Elastic and HashiCorp, have adopted this approach).

    - Require you to share whatever you develop using open-source libraries.

    - Require you to declare your use of libraries (remember, such information can provide clues on possible attack vectors).

  - Fortunately, several organizational umbrellas, such as the Linux Foundation (and its subsidiary organizations, such as the CNCF), require their projects to adopt a permissive licensing model.

  - Commercial licenses can come into play as well. The open source model often involves the key contributing organizations offering services such as support and training, or extended features. This is attractive for larger organizations, as it gives them a fallback and access to specialist resources. However, we also have products that only exist commercially. Understanding the licensing position of these tools is essential. Take Oracle Database, for example, where you pay for production deployments by the number of CPUs, but non-production deployments are free. Such licensing may have a material impact on the architecture, for example, minimizing the amount of non-database compute that takes place on those nodes, and how you size your solution, such as having more, less powerful CPUs to provide better resilience. In terms of negative impacts…

    - You can become exposed to unplanned license costs.

    - The solution’s cost-benefit case can be undermined.

- GDPR – There are many variations of data protection law, but most have taken the General Data Protection Regulation (GDPR) as a foundation. It is essential to understand the concepts it covers: the right to know and correct the data held about an individual, disclosure of how personal data is used, and the right to be forgotten. There are resources available that cover which laws apply where. The negative impacts…

  - Additional development processes and administration to create evidence of compliance (e.g., an audit of access to data).

  - Additional costs to satisfy compliance, e.g., regular mandatory training for all developers who could be impacted.

- Several acts, such as the US CLOUD Act, can also impact the choice of service providers for hosting, such as cloud providers. This highlights an interesting factor to keep in mind: legislation from other countries can still impact you even if the solution will not be used in that country. Impacts could be…

  - Using a sovereign cloud, with any associated costs.

  - Solution options being constrained by the availability of sovereign cloud services.

  - Limiting the use of managed services to keep the solution portable across different sovereign clouds.

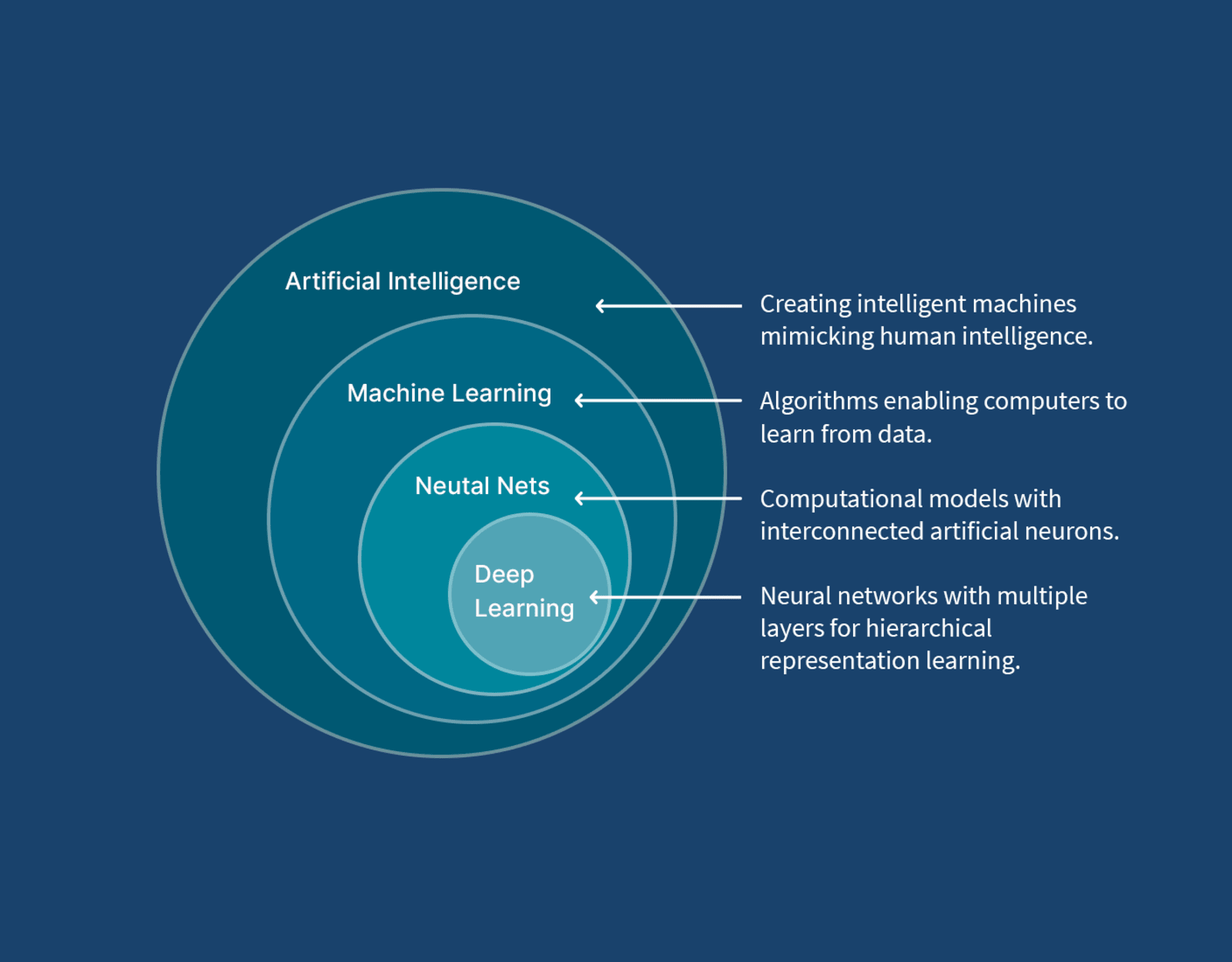

- AI and ML are rapidly evolving areas of legislation. The EU has been proactive in this space with the AI Act. However, secondary legislative factors exist, such as intellectual property law. While we may not all be directly involved in training LLMs, we still need to understand the ramifications and the data we work with. Possible impacts can include…

  - Data source assurance processes.

- PCI – While the Payment Card Industry (PCI) standards do not have legal standing, their impact is broad and substantial, so we might as well treat them as if they do. The exact requirements depend on whether you’re an organization taking and storing card details or a service provider.

- As with PCI, some requirements are not strictly legislation, but certain domains demand compliance with various standards. Perhaps the most pervasive of these is ISO 27001, which covers information security across the spectrum of business and commercial considerations, and extends to IT infrastructure, software, and its development. It is essential to understand this and standards such as SOC 1, SOC 2, and SSAE 16 (now 18 and 22), as you need to determine whether they are important to you, particularly when considering cloud and SaaS services. Things have improved over time, but we have encountered specialist managed/cloud services where the providers were unaware of such standards and had no position on, or evidence of addressing, some of the expectations set out by SOC 1 and SOC 2.

- If you work for a software vendor, export law can impact your business, particularly when the solution involves complex algorithms such as those used in encryption.
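To make the software license point above a little more concrete, here is a minimal sketch of how a dependency license check might be automated. It assumes a Python code base, and the keyword lists, bucket names, and function names are all hypothetical; a real policy should come from your legal team, and keyword matching on free-text license fields is deliberately crude.

```python
from importlib import metadata

# Hypothetical policy buckets, checked in this order. These keyword lists are
# illustrative assumptions only, not legal advice.
POLICY = (
    ("restricted", ("sspl", "business source", "busl", "elastic license")),
    ("copyleft", ("gpl", "share-alike", "sharealike", "cc-by-sa")),
    ("permissive", ("apache", "mit", "bsd")),
)


def classify_license(license_text):
    """Map a free-text license string to a policy bucket by keyword match."""
    text = (license_text or "").lower().strip()
    if not text:
        return "unknown"
    for bucket, keywords in POLICY:
        if any(keyword in text for keyword in keywords):
            return bucket
    return "unknown"


def needs_review(dependencies):
    """Return {name: bucket} for every dependency that is not clearly permissive."""
    flagged = {}
    for name, license_text in dependencies.items():
        bucket = classify_license(license_text)
        if bucket != "permissive":
            flagged[name] = bucket
    return flagged


def installed_licenses():
    """Collect the declared license of each installed Python distribution."""
    found = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name", "unknown")
        found[name] = dist.metadata.get("License") or ""
    return found
```

Calling `needs_review(installed_licenses())` would list the packages worth a conversation with the legal eagles. Anything landing in the `unknown` bucket is flagged on purpose, since an unclear or implicit license is itself a risk.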

These points primarily focus on ‘universal truths’, but there are domain-specific laws and expected standards that can be considered in the same or similar light. Most domains have specialist legislation, such as the Digital Operational Resilience Act (DORA), which impacts financial businesses, and Consumer Protection (Distance Selling) regulations for e-tail.
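Whichever laws and standards apply, much of the architectural impact comes down to producing evidence, such as the audit of access to data mentioned under GDPR. As a rough sketch of what that can mean in code, the example below wraps a data access function so that every call is recorded. The names `audited`, `AUDIT_LOG`, and `read_customer` are hypothetical, and the in-memory list is only a stand-in for a proper append-only store.

```python
import functools
from datetime import datetime, timezone

# Stand-in for an append-only audit store; a real system would persist this.
AUDIT_LOG = []


def audited(purpose):
    """Record who accessed which data subject, when, and why."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(user, subject_id, *args, **kwargs):
            # Write the audit entry before the access takes place.
            AUDIT_LOG.append({
                "when": datetime.now(timezone.utc).isoformat(),
                "user": user,
                "subject_id": subject_id,
                "action": func.__name__,
                "purpose": purpose,
            })
            return func(user, subject_id, *args, **kwargs)
        return wrapper
    return decorator


@audited(purpose="customer support")
def read_customer(user, subject_id):
    """Hypothetical data access function; replace with a real lookup."""
    return {"id": subject_id}
```

A call such as `read_customer("alice", 42)` returns the record as before and leaves one entry in `AUDIT_LOG`. Keeping the purpose alongside the user and timestamp is a design choice that makes it easier to answer disclosure questions later.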

