
Buying vinyl as a gift for a loved one can be tricky if you’re not an aficionado. Buying vinyl in general is easy: a box shifter like Amazon will do. However, in most cases you will only get a generic pressing of a mainstream artist, not something collectible.

This isn’t a hint on my own behalf, as I always keep a list of suggestions for those significant dates. As I’m not the only vinyl fan in our extended family, I thought I’d share the thinking I go through, or at least that is how this post started out.

New releases

From a collector’s perspective, as with books, first issues are generally more collectible than later pressings or reissues. Reissues are often on standard black vinyl with a standard sleeve. There are some cases where reissues are worth considering, and we’ll come back to this.

In the last couple of years, new releases have often been made available in multiple versions. The versions differ in two ways. Firstly, special editions come with extra tracks, typically:

- Alternate mixes: variations in how the song was put together in the studio.

- Demos: early versions that artists put together before entering the studio to produce the song properly. In some cases, these versions turn out to be better than the final production (as was the case with Norah Jones’ debut album).

- Live performances: artists often record their own shows, even if only to review and improve them.

- B-sides: when vinyl and CD singles were dominant, singles carried additional tracks. It was once common for an artist to record 20 or more songs for an album; ten or so would make the album, and others would be included as B-sides.

- Surround mixes: a recent development is the inclusion of different audio mixes, such as 5.1 or 7.1, which often come on an accompanying Blu-ray Disc.

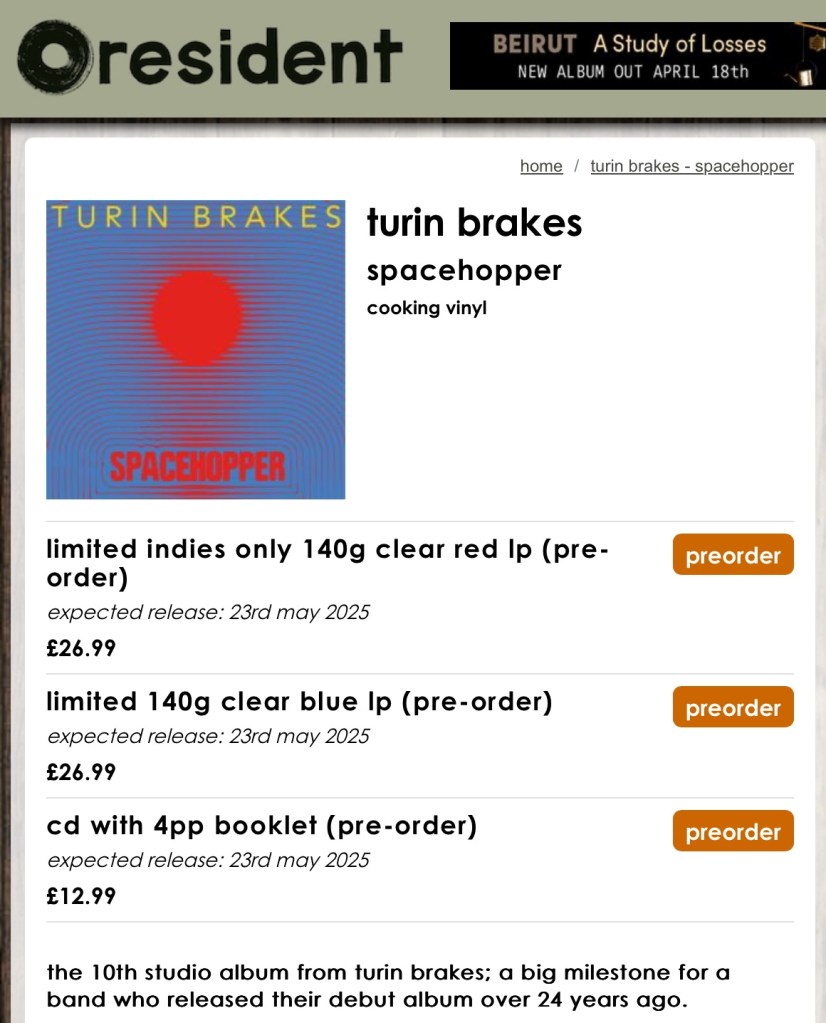

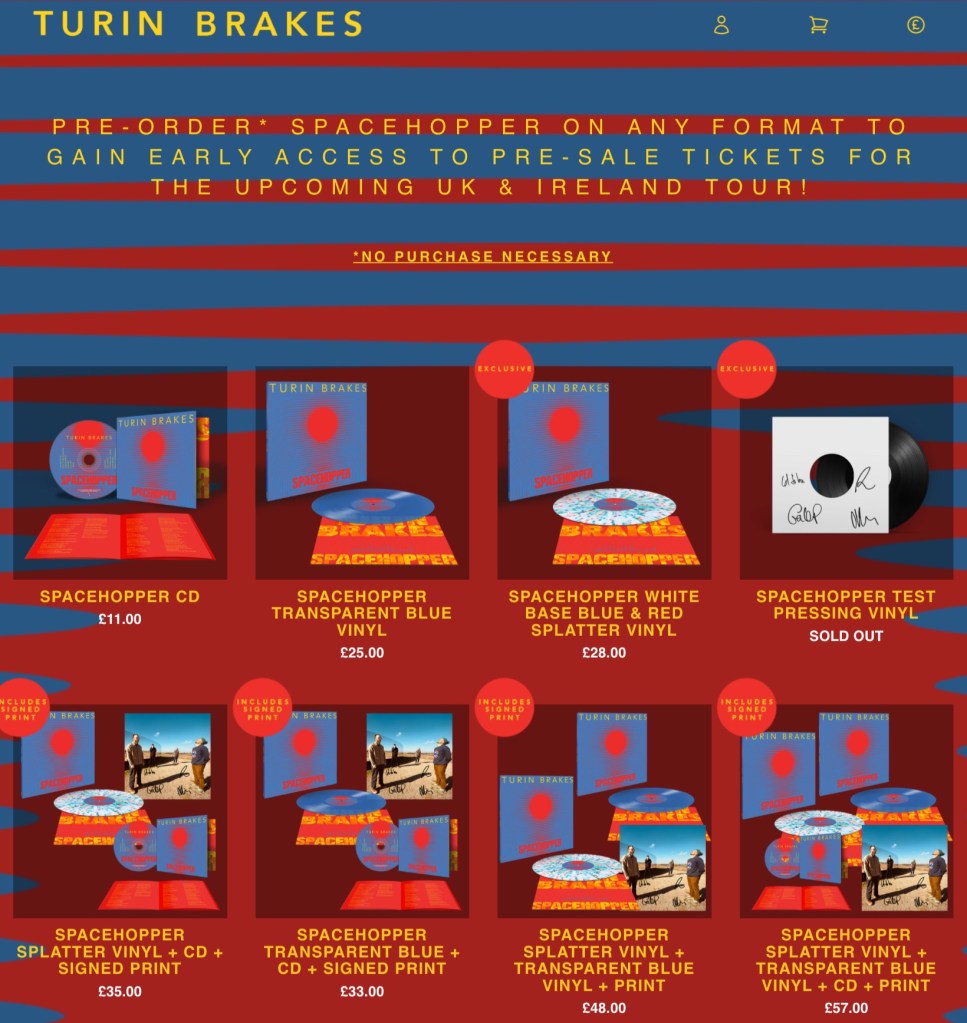

Secondly, and most commonly, versions differ in the vinyl itself. Indie record stores often offer a limited run of coloured vinyl, and sometimes even picture discs or, in recent years, zoetrope artwork and etched album sides. These are harder and more expensive to produce, so they are usually made in smaller, often limited, runs, which makes them more collectible.

Coloured Vinyl sources

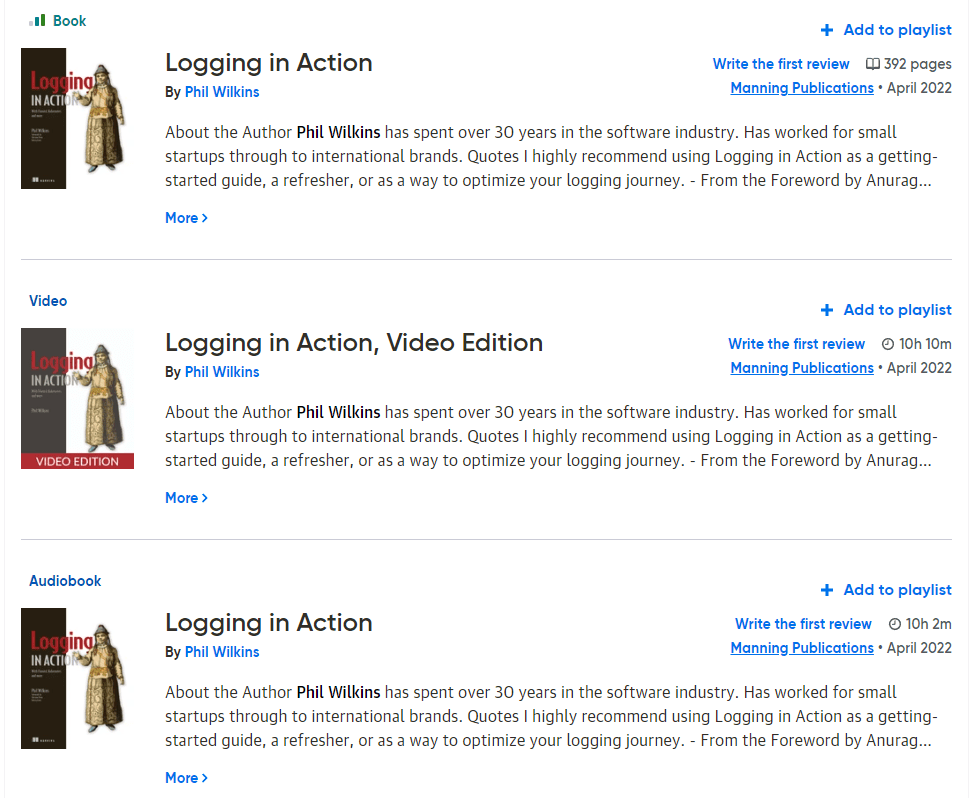

As mentioned above, artists and record labels have supported independent record stores by providing coloured vinyl versions alongside the standard black. In recent years this has expanded to special colours offered to fans through streaming services such as Spotify and through artists’ own websites.

Resident Music’s listing for the new Turin Brakes album versus the band’s (label-managed) website

Sadly, some very successful artists have adopted this approach to entice loyal fans into buying multiple copies of the same album, with even Amazon getting its own exclusive coloured vinyl. I personally feel that Taylor Swift releasing more than 45 versions of one album is somewhat exploitative of her fan base.

Numbered Editions

We’ve mentioned that coloured vinyl runs are often limited. How limited varies, so when a release is listed as a limited edition, it is worth checking whether the number of copies is stated. These releases will usually also be numbered, with the number printed or handwritten onto them. The size of the run influences value: some runs are as large as 10,000 copies, which is still relatively rare for a popular artist but not for a smaller name, while others are as small as 500 copies. So consider the artist’s popularity when judging the numbering.

Dinked Editions

In the U.K., a group of indie record stores has been working with smaller indie artists to release ‘Dinked Editions’ of albums. These versions are developed with the artists and often have different album covers and additional tracks on a supplemental single. They are numbered limited editions, usually of between 500 and 1,000 copies.

Signed artwork

An artist’s signature will always make a record more collectible. However, signing sleeves is problematic: doing it once an album is packaged means a lot of weight has to be moved around, while signing sleeves before the records are inserted can disrupt production. As a result, the signed item is sadly often a separate art card, so a genuinely signed sleeve will always be more collectible.

Record Store Day / National Album Day

Record Store Day (RSD) has been running for nearly 20 years. It started as a lifeline to keep independent record stores alive at a time when many were closing down. RSD releases are usually limited runs, with just enough copies produced to sell in stores on a specific date. They tend to go beyond coloured vinyl, with artists releasing ‘new’ material (sometimes older but previously unreleased, remastered, or demo recordings).

While RSD releases are aimed at in-store sales on the day, stores can sell their remaining RSD stock online from the Monday evening after the event. Occasionally, you may find a store with a stray copy lingering well after the event, but this is relatively rare.

Getting an RSD release therefore involves a bit of luck and timing. Being willing to queue at a store boosts your chances of getting the release you want. It helps that the list of releases is published ahead of the day, so you know in advance what to look for.

RSD normally takes place on a Saturday in April and has become something of an event. So if you’re not that interested in the music you’re buying for someone, you’re better off gambling on a store listing it online at 8pm on the following Monday. Most indie stores have online sales channels (often this is how they survive during quiet times), so bookmark several to try.

Unlike RSD, National Album Day isn’t a global event. Although there are similarities, National Album Day currently doesn’t carry the same impact.

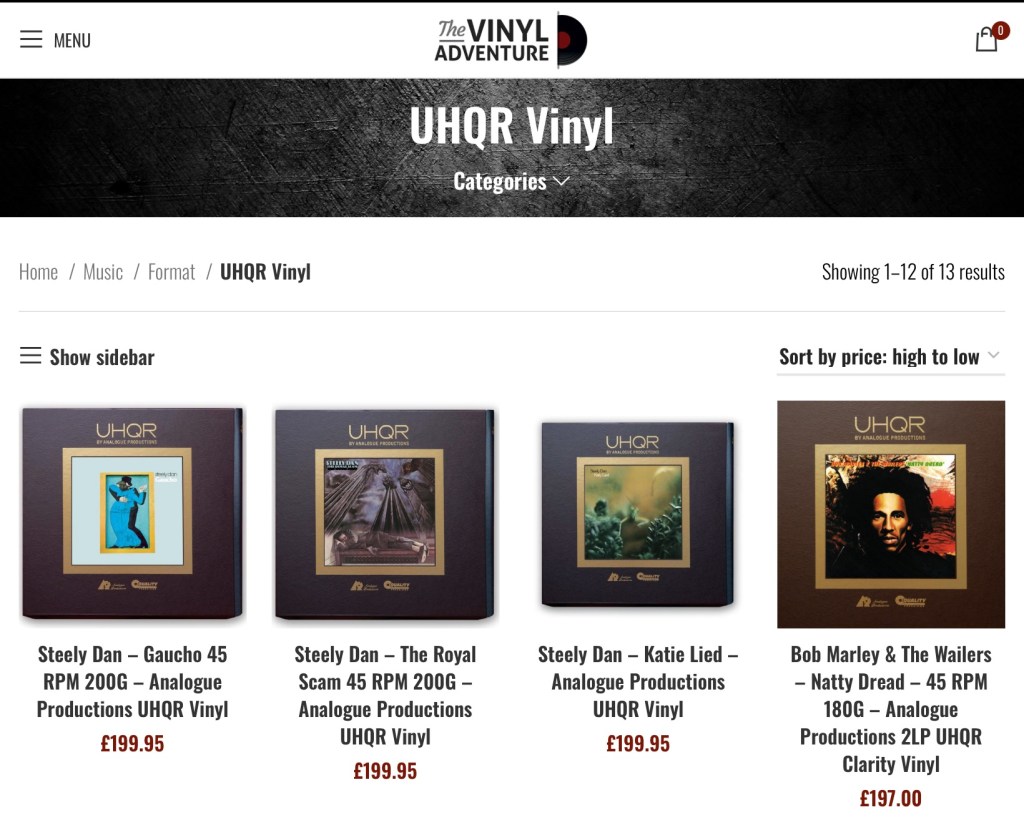

Vinyl weight and Audiophile pressings

It is worth checking the quoted vinyl weight. Good-quality releases are typically 180 g, although 140 g is not uncommon; standard releases can be as light as 100 to 120 g. The heavier the vinyl, the less susceptible it is to warping, and the less likely any surface scuffing is to affect sound reproduction.

There is a correlation between vinyl weight and pressing quality, with some specialist pressings exceeding 180 g. These come from specialist companies such as Mobile Fidelity Sound Lab. Such releases often have albums cut to play at 45 RPM rather than 33⅓ RPM: the groove passes the stylus about 35% faster (45 ÷ 33⅓ ≈ 1.35), so each second of music occupies more groove length, at the cost of fitting less music on each side. These releases can cost several times as much as a standard release, and the outlay pays off mainly on an audiophile setup.

Box sets

Album box sets can be an attractive vinyl gift, but they can be rather expensive for the amount of music included. Not all box sets are published as limited editions, but most can effectively be treated as such, because few buyers are prepared to spend hundreds of pounds on a small amount of genuinely new material. For example, Rush’s R50 contains seven previously unreleased tracks with a £234.99 price tag.

Many box sets focus on a single album, e.g., Peter Gabriel’s I/O or Genesis’ 50th anniversary edition of The Lamb Lies Down on Broadway. By contrast, Springsteen’s new Tracks II is priced at nearly £300, but it does contain seven albums’ worth of new material.

Vinyl Bootlegs

The vinyl revival has also driven the bootleg market. Bootlegs (sometimes referred to as Recordings Of Illegitimate Origin, or ROIO) really took off with CDs, because production costs are low, and took off even more with downloading. While downloading has dented the value of the market, it doesn’t replace the sleeves and artwork that can go into such releases.

When it comes to the legality of such recordings, some loopholes in U.K. law can lend these releases sufficient legitimacy, which is why they show up in record stores.

An important consideration when buying bootlegs is the lineage of the recording, i.e., the chain of copies between the original source and the vinyl. For example, a recording may have been taken from an FM broadcast and transferred to a cheap cassette before being transferred to vinyl, losing quality at each step. Some labels and bootleg series, such as Transmission Impossible, have a reputation for good lineage.

Sometimes, a bootleg recording of a specific performance is worth having regardless of its quality. But knowing this requires research into the performances and the bootleg labels.

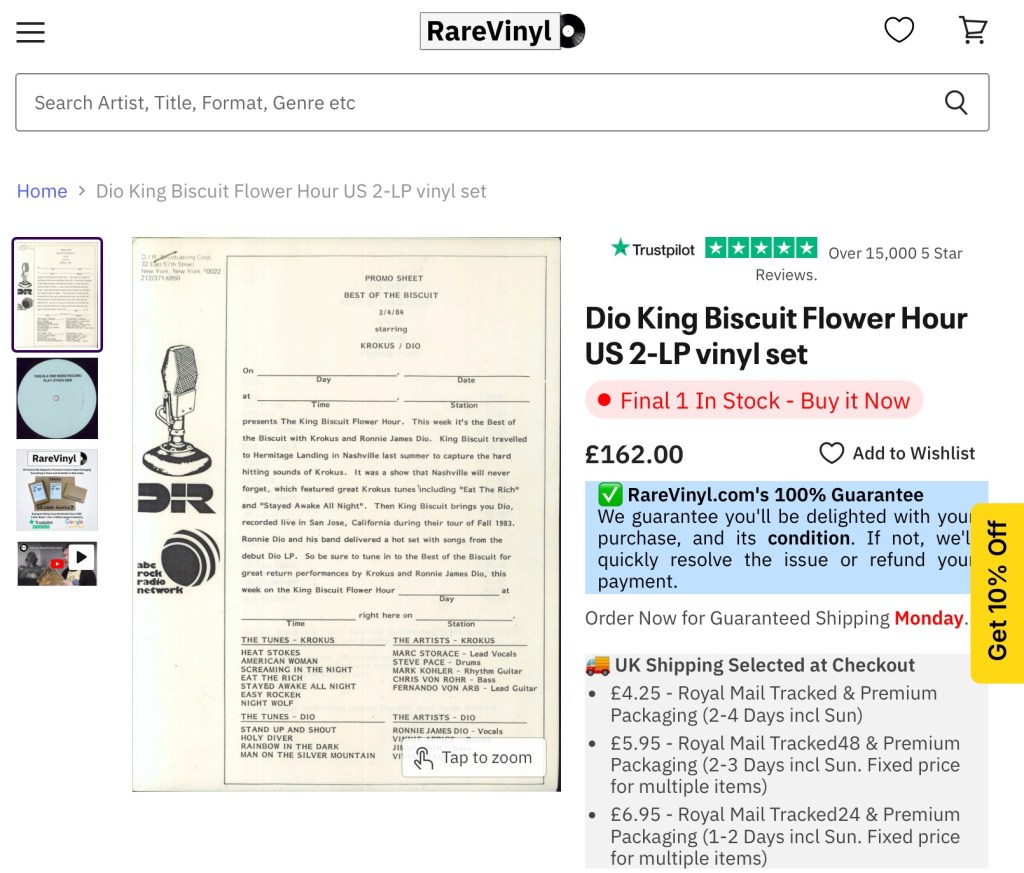

Rare Vinyl

As with rare books, buying rare records requires an understanding of what is valuable and what is not. Many factors can influence value, such as:

- Flawed productions or label misprints.

- Original pressings, and pressings of the wrong version of an album (different mixes, etc.).

- Alternate sleeves, e.g., where early releases had gatefold sleeves but later ones switched to a conventional sleeve.

- Artists signing the sleeve.

- First pressings of some albums.

In addition to these points and all the earlier considerations (numbering, limited issues, coloured vinyl, etc.), evidence of authenticity is important. Details such as the matrix codes etched into the run-out area of the vinyl can help identify specific versions; such information can be found on sites like Discogs.

However, there are some easier value propositions, such as pressed broadcasts like the King Biscuit Flower Hour and BBC Top of the Pops, which were pressed in limited numbers so that vinyl copies could be distributed to regional radio stations.

Understanding value and pricing becomes easier once you understand how second-hand vinyl is graded. The sleeve and the vinyl are graded separately. Grades run downward from mint (like new) through near mint (NM), excellent (Ex), very good (VG), and so on. Personally, I would focus on VG or better, except in special cases (a more precise definition can be seen here).

Finding Your Indie Store

As I’ve mentioned indie record stores several times, the obvious question is: where are they, and how do I find them? Most cities these days have an indie store, but they aren’t usually on the high street, so you’ll need to seek them out. Of course, a LOT (not all) of them are also online. The easiest ways to locate your nearest indie store are:

- A Google search for something like ‘indie record stores’.

- RSD Record Shop Locator

- Discogs map

Conclusion

We’ve covered a lot of possibilities here. However, this is far from exhaustive, and we will follow up with another post exploring other ideas, not all of which involve vinyl.
