Now that details of the product I’ve been involved with for the last 18 months or so are starting to reach the public domain (such as the recent announcement at the UN General Assembly on September 25), I can talk to a bit about what we’ve been doing. Oracle’s Digital Government Global Industry Unit has been working on a solution that can help governments address the questions of food security.

So what is food security? The World Food Programme describes it as:

Food security exists when people have access to enough safe and nutritious food for normal growth and development, and an active and healthy life. By contrast, food insecurity refers to when the aforementioned conditions don’t exist. Chronic food insecurity is when a person is unable to consume enough food over an extended period to maintain a normal, active and healthy life. Acute food insecurity is any type that threatens people’s lives or livelihoods.

World Food Programme

By referencing the World Food Programme, it would be easy to interpret this as a 3rd world problem. But in reality, it applies to just about every nation. We can see this, with the effect the war in Ukraine has had on crops like Wheat, as reported by organizations such as CGIAR, European Council, and World Development journal. But global commodities aren’t the only driver for every nation to consider food security. Other factors such as Food Miles (an issue that perhaps has been less attention over the last few years) and national farming economics (a subject that comes up if you want to it through a humour filter with Clarkson’s Farm to dry UK government reports and US Department of Agriculture.

Looking at it from another perspective, some countries will have a notable segment of their export revenue coming from the production of certain crops. We know this from simple anecdotes like ‘for all the tea in China’, coffee variants are often referred to by their country of origin (Kenyan, Columbian etc.). For example, Palm Oil is the fourth-largest economic contributor in Malaysia (here).

So, how is Oracle helping countries?

One of the key means of managing food security is understanding food production and measuring the factors that can impact it (both positively and negatively), which range from the obvious—like weather (and its relationship to soil, water management, etc.) —to what crop is being planted and when. All of which can then be overlayed with government policies for land management and farming subsidies (paying farmers to help them diversify crops, periodically allowing fields to go fallow, or subsidizing the cost of fertilizer).

Oracle is a technology company capable of delivering systems that can operate at scale. Technology and the recent progress in using AI to help solve problems are not new to agriculture; in fact, several trailblazing organizations in this space run on Oracle’s Cloud (OCI), such as Agriscout. Before people start assuming that this is another story of a large cloud provider eating their customers’ lunch, far from it, many of these companies operate at the farm or farm cooperative level, often collecting data through aerial imagery from drones and aircraft, along with ground-based sensors. Some companies will also leverage satellite imagery for localized areas to complement these other sources. This is where Oracle starts to differentiate itself – by taking high-resolution imagery (think about the resolution level needed to differentiate Wheat and Maize, or spot rice and carrots, differentiate an orchard from a natural copse of trees). To get an idea, look at Google Earth and try to identify which crops are growing.

We take the satellite multi-spectral images from each ‘satellite over flight’ and break it down, working out what the land is being used for (ruling out roads, tracks, buildings, and other land usage). To put the effort to do this into context, the UK is 24,437,600,000 square meters and is only 78th in the list of countries by area (here). It’s this level of scale that makes it impractical to use more localized data sources (imagine how many people and the number of drones needed to fly over every possible field in a country, even at a monthly frequency).

This only solves the 1st step of the problem, which is to tell us the total crop growing area. It doesn’t tell us whether the crop will actually grow well and produce a good yield. For this, you’re going to need to know about weather (current, forecast, and historic trends), soil chemical composition and structure, and information such as elevation, angle, etc. Combined with an understanding of optimal crop growing needs (water levels, sun light duration, atmospheric moisture, soil types and health) – good crops can be ruined by it simply being too wet to harvest them, or store them dryly. All these factors need to be taken into account for each ‘cell’ we’re detecting, so we can calculate with any degree of confidence what can be produced.

If this isn’t hard enough, we need to account for the fact that some crops may have several growing seasons per year, or succession planting is used, where Carrots may be grown between March and June, followed by Cucumbers through to August, and so on.

Using technology

Hopefully, you can see there are tens of millions of data points being processed every day, and Oracle’s data products can handle that. As a cloud vendor, we’re able to provide the computing scale and, importantly, elasticity, so we can crunch the numbers quickly enough that users benefit from the revised numbers and can work out mitigation actions to communicate to farmers. As mentioned, this could be planning where to best use fertilizer or publishing advice on when to plant which crops for optimal growing conditions. In the worst cases recognizing there is going to be a national shortage of a staple crop and start purchasing crops from elsewhere and ensure when the crops arrive in ports they get moved out to the markets (like all large operations – as we saw with the Covid crises – if you need to react quickly, more mistakes can be made, costs grow massively driven by demand).

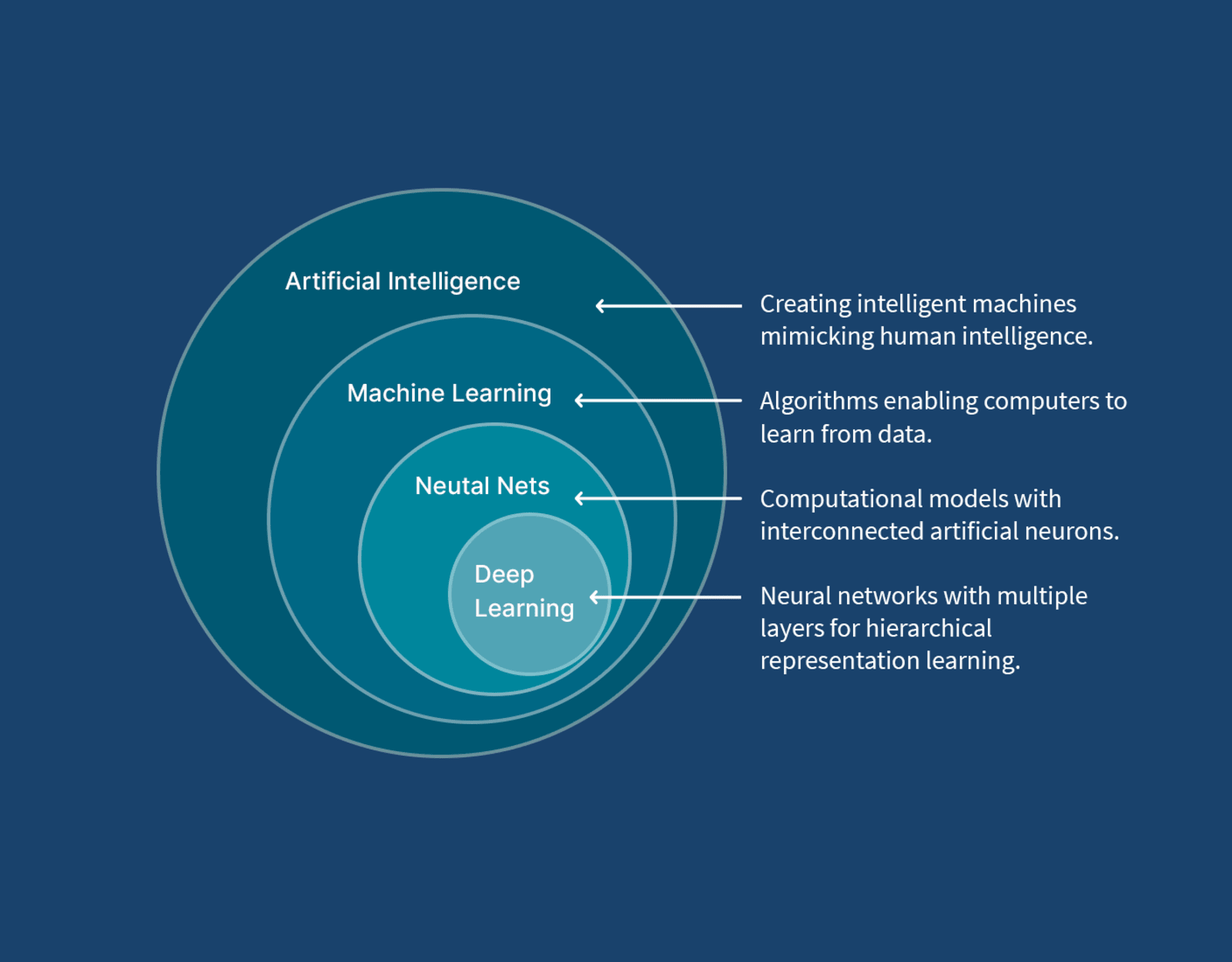

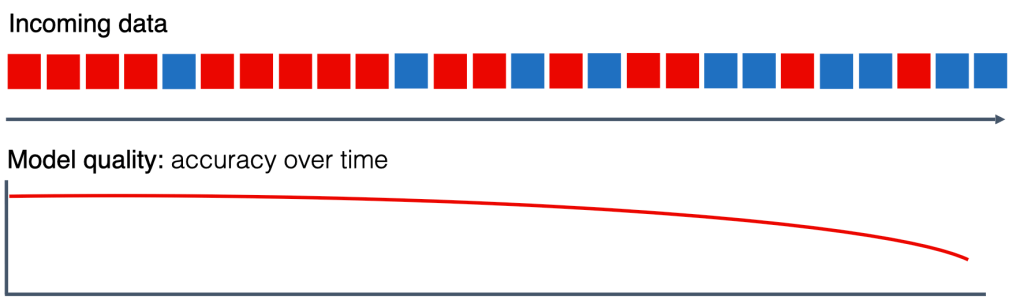

I mentioned AI, if you have more than the most superficial awareness of AI, you will probably be wondering how we use it, and the problems of AI hallucination – the last thing you want is a being asked to evaluate something and hallucinating (injecting data/facts that are not based on the data you have collected) to create a projection. At worst, this would mean providing an indication that everything is going well, when things are about to really go wrong. So, first, most of the AI discussed today is generative, and that is where we see issues like hallucinations. We’re have and are adopting this aspect of AI where it fits best, such as explainability and informing visualization, but Oracle is making heavy use of the more traditional ideas of AI in the form of Machine Learning and Deep Learning which are best suited to heavy numerical computational uses, that is not to say there aren’t challenges to be ddressed with training the AI.

Conclusion

When it comes to Oracle’s expertise in the specialized domains of agriculture and government, Oracle has a strong record of working with governments and government agencies from its inception. But we’ve also worked closely with the Tony Blair Institute for Global Change, which works with many national government agencies, including the agriculture sector.

My role in this has been as an architect, focused primarily on applying integration techniques (enabling scaling and operational resilience, data ingestion, and how our architecture can work as we work with more and more data sources) and on applying AI (in the generative domain). We’re fortunate to be working alongside two other architects who cover other aspects of the product, such as infrastructure needs and the presentation tier. In addition, there is a specialist data science team with more PhDs and related awards than I can count.

Oracle’s Digital Government business is more than just this agriculture use case; we’ve identified other use cases that can benefit from the data and its volume being handled here. This is in addition to bringing versions of its better-known products, such as ERP, Healthcare (digital health records management, vaccine programmes, etc.), national Energy and Water (metering, infrastructure management, etc).

For more on the agricultural product:

You must be logged in to post a comment.